Kerberos has been an industry standard for authentication for many years and, as of 5.3, AMPS now ships with Kerberos support. AMPS Kerberos support is provided as one of the authentication mechanism options available via the libamps_multi_authentication module. Kerberos requires that an authentication token be generated and set by the client, so there are also client-side Kerberos authenticators implemented for each of the AMPS client libraries.

Before going any further, it’s important to note that for this post (and to use Kerberos in your environment), Kerberos infrastructure is a prerequisite. Setting up Kerberos infrastructure is beyond the scope of this article, and is something that is normally managed by a dedicated team.

Assuming that Kerberos is already set up for your environment, you will need the following for this demo to function:

- 2 Kerberos Service Principal Names (SPNs):
  - `HTTP/hostname` - For securing the AMPS Admin Interface (`hostname` must be the fully qualified host name where your AMPS instance is running)
  - `AMPS/hostname` - For securing the AMPS Transports

- A Kerberos keytab containing the above SPNs

- A user with Kerberos credentials (these may be obtained via `kinit`, via a keytab for the user, or automatically during logon, which is often the case for Windows/Active Directory). Note that configuring Replication Authentication requires a keytab for the user that the AMPS server will authenticate as.

Configuring AMPS

As documented in the Configuration Guide, the Authentication element can be specified for the instance as a whole and/or for each Transport. In the configuration below, Kerberos authentication is enabled for all transports using the AMPS SPN and then overridden for the Admin interface to use the HTTP SPN. Note that in addition to the Authentication element, libamps_multi_authentication.so must be specified as a Module in the Modules section, since it is not loaded into AMPS by default.

The below AMPS configuration uses environment variables for the Kerberos configuration elements, thus before starting AMPS using this config the following variables need to be set:

- `AMPS_SPN` - Set to `AMPS/hostname` where `hostname` is the fully qualified name of the host AMPS is running on.
- `HTTP_SPN` - Set to `HTTP/hostname` where `hostname` is the fully qualified name of the host AMPS is running on.
- `AMPS_KEYTAB` - Set to the path of a Kerberos keytab containing entries for the `AMPS` and `HTTP` SPNs.
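As a rough illustration of how these three variables relate to each other, the sketch below derives both SPNs from a host name and reports which variables are still unset. The helper names (`kerberos_env`, `missing_vars`) and the keytab path are hypothetical and exist only for this example; they are not part of AMPS.

```python
import os
import socket

# The variable names used by the sample AMPS configuration.
REQUIRED_VARS = ("AMPS_SPN", "HTTP_SPN", "AMPS_KEYTAB")


def kerberos_env(hostname=None, keytab="/path/to/amps.keytab"):
    """Build the environment the sample config expects (hypothetical helper).

    If no hostname is given, fall back to the fully qualified name that the
    local resolver reports for this machine.
    """
    host = hostname or socket.getfqdn()
    return {
        "AMPS_SPN": "AMPS/%s" % host,
        "HTTP_SPN": "HTTP/%s" % host,
        "AMPS_KEYTAB": keytab,
    }


def missing_vars(env=None):
    """Return the required variables that are unset or empty in env."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]
```

Calling `missing_vars()` before launching AMPS gives an early warning if one of the variables was forgotten, which is usually easier to diagnose than a failed substitution in the configuration file.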

```xml
<AMPSConfig>

  ...

  <Authentication>
    <Module>libamps-multi-authentication</Module>
    <Options>
      <Kerberos.SPN>${AMPS_SPN}</Kerberos.SPN>
      <Kerberos.Keytab>${AMPS_KEYTAB}</Kerberos.Keytab>
    </Options>
  </Authentication>

  <Admin>
    <InetAddr>8085</InetAddr>
    <FileName>stats.db</FileName>
    <Authentication>
      <Module>libamps-multi-authentication</Module>
      <Options>
        <Kerberos.SPN>${HTTP_SPN}</Kerberos.SPN>
        <Kerberos.Keytab>${AMPS_KEYTAB}</Kerberos.Keytab>
      </Options>
    </Authentication>
  </Admin>

  <Transports>
    <!-- Authentication enabled via the top-level Authentication configuration -->
    <Transport>
      <Name>json-tcp</Name>
      <InetAddr>8095</InetAddr>
      <Type>tcp</Type>
      <Protocol>amps</Protocol>
      <MessageType>json</MessageType>
    </Transport>
  </Transports>

  <Modules>
    <Module>
      <Name>libamps-multi-authentication</Name>
      <Library>libamps_multi_authentication.so</Library>
    </Module>
  </Modules>

  ...

</AMPSConfig>
```

Success!

When AMPS starts up the following will be written to the AMPS log.

```
2019-04-23T13:51:11.2302690 [1] info: 29-0103 AMPS authentication creating authentication context for transport 'amps-admin'
2019-04-23T13:51:11.2302900 [1] info: 29-0103 AMPS Kerberos authentication enabled with the following options: [Kerberos.Keytab=/home/bamboo/blog/instance/HTTP-linux-ip-172-31-46-201.us-west-2.compute.internal.keytab] [Kerberos.SPN=HTTP/ip-172-31-46-201.us-west-2.compute.internal]
2019-04-23T13:51:11.2303670 [1] info: 29-0103 AMPS Kerberos authentication importing service name 'HTTP@ip-172-31-46-201.us-west-2.compute.internal'
2019-04-23T13:51:11.2303720 [1] info: 29-0103 AMPS Kerberos authentication acquiring credentials for service name 'HTTP@ip-172-31-46-201.us-west-2.compute.internal'
2019-04-23T13:51:11.2564880 [1] info: 29-0103 AMPS authentication context created successfully
2019-04-23T13:51:11.2564950 [1] info: 29-0103 AMPS authentication creating authentication context for transport 'json-tcp'
2019-04-23T13:51:11.2565040 [1] info: 29-0103 AMPS Kerberos authentication enabled with the following options: [Kerberos.Keytab=/home/bamboo/blog/instance/AMPS-linux-ip-172-31-46-201.us-west-2.compute.internal.keytab] [Kerberos.SPN=AMPS/ip-172-31-46-201.us-west-2.compute.internal]
2019-04-23T13:51:11.2565270 [1] info: 29-0103 AMPS Kerberos authentication importing service name 'AMPS@ip-172-31-46-201.us-west-2.compute.internal'
2019-04-23T13:51:11.2565280 [1] info: 29-0103 AMPS Kerberos authentication acquiring credentials for service name 'AMPS@ip-172-31-46-201.us-west-2.compute.internal'
2019-04-23T13:51:11.2609840 [1] info: 29-0103 AMPS authentication context created successfully
```

Below is the logging for a successful authentication. In this log snippet we can see the following:

- Client connection

- Client logon (with the password field set to the base64-encoded Kerberos token)

- Logging related to the authentication process including success messages

- Client session info

- Logon response (with the password field set to the base64-encoded Kerberos response token)
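To make the connection between the `pw` field and the token lengths that AMPS logs a little more concrete, here is a small sketch that reads the length declared in a token's header. It relies on the standard GSS-API framing, in which a context token starts with the byte `0x60` followed by a DER-encoded length. The function name `gss_token_length` is made up for this example and is not part of AMPS or its client libraries.

```python
import base64


def gss_token_length(b64_token):
    """Return the total size in bytes that a GSS-API token header declares.

    Only the first 8 base64 characters (6 bytes) are decoded, which is
    enough to hold the tag byte and a DER length of up to 4 bytes.
    """
    header = bytearray(base64.b64decode(b64_token[:8]))
    if header[0] != 0x60:
        raise ValueError("not a GSS-API context token")
    first = header[1]
    if first < 0x80:
        # Short form: the byte is the content length itself.
        return 2 + first
    # Long form: the low 7 bits say how many bytes hold the length.
    count = first & 0x7F
    length = 0
    for byte in header[2:2 + count]:
        length = (length << 8) | byte
    return 2 + count + length
```

Applied to the `pw` values in the log snippet for this section, the logon token (which begins `YIICmw`) declares 671 bytes and the response token (which begins `YIGZ`) declares 156 bytes, matching the input and output token lengths that AMPS reports.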

```
2019-04-23T14:20:37.8828830 [30] info: 07-0023 New Client Connection: client info: client name=AMPS_A-json-tcp-3-212422832437882870 description=172.31.46.201:52502->172.31.46.201:8095
2019-04-23T14:20:37.8830300 [15] trace: 12-0010 client[AMPS_A-json-tcp-3-212422832437882870] logon command received: {"c":"logon","cid":"0","client_name":"KerberosExampleClient","user_id":"60east","mt":"json","a":"processed","version":"develop.c75d0e8.256701:c++","pw":"YIICmwYJKoZIhvcSAQICAQBuggKKMIIChqADAgEFoQMCAQ6iBwMFACAAAACjggGWYYIBkjCCAY6gAwIBBaEUGxJDUkFOS1VQVEhFQU1QUy5DT02iPjA8oAMCAQOhNTAzGwRBTVBTGytpcC0xNzItMzEtNDYtMjAxLnVzLXdlc3QtMi5jb21wdXRlLmludGVybmFso4IBLzCCASugAwIBEqEDAgECooIBHQSCARkk+zZFgqOGk2kNVzI3R8MC839gvYWPUcKeNOLu2vQvHxpfT5eyW272Y6qXrttx2J4S7ccRjlwGRPxjITFGHtiGM4T7CC3DNwPieYH2qhU3pIjsDldBUqVLnNhdkwAFaj+H2gw/UIudc8DHhNAfIL8xXc3qlun/iw1zE4gsSw8NkqJewbrNLY9Q5wgpFScKGGhtmrSTelAERzp4X6Qsju5IGtVTIhzngq45sAmhiW/tqT8u5TS8mSoILYm/e8QseL24FYPwt7mueD/U4Lo27bsD4HkAMQ1OHQXPm0rp+zRz2js5A1dAQXcSLWB67016iGfto01qR+TorjKpGbM/kJX2DzfjVWiu2olcoD0CaApMQSRklN4pzoFoCqSB1jCB06ADAgESooHLBIHI1PJpEsljIQpzSi3tJHA/DIpjj25ODoOYNkbxSvHCZB22t0r+sqgxVycwnGEI8C4b8BLCp7PW8iHm0UWl2r3osNCjT8EuNFc7jAoyQIbIRRMoGH50BUzQbxGjz1th3WKzs7dlG6vOEcKXPJYGHq0hguc2lScBpXDzqw+f/wIGVdcsNGyHoY3yBXvo600pAxVaJv+jxx4X+9FbDoIUd8/l8KLXxt6ocdmMGutAec0IlO8ksUkbIOjDlaYiv9fOeoVbL1mSyOLmWTA="}
2019-04-23T14:20:37.8830810 [28] info: 29-0103 AMPS authentication executing authentication for user '60east' using Kerberos
2019-04-23T14:20:37.8830900 [28] info: 29-0103 AMPS Kerberos authentication accepting Kerberos security context with input token length of 671 bytes
2019-04-23T14:20:37.8855330 [28] info: 29-0103 AMPS Kerberos authentication creating output token length of 156 bytes
2019-04-23T14:20:37.8855780 [28] info: 29-0103 AMPS Kerberos authentication successfully processed security context for user '60east@CRANKUPTHEAMPS.COM'
2019-04-23T14:20:37.8855820 [28] info: 29-0103 AMPS Kerberos authentication successfully authenticated user '60east'
2019-04-23T14:20:37.8855830 [28] info: 29-0103 AMPS authentication successfully authenticated user '60east' using Kerberos
2019-04-23T14:20:37.8856330 [29] info: 1F-0004 [AMPS_A-json-tcp-3-212422832437882870] AMPS client session logon for: KerberosExampleClient client session info: client auth id='60east' client name hash=15396145143580885467 client version=develop.c75d0e8.256701:c++ last acked client seq=0 last txlog client seq=0 correlation id=
2019-04-23T14:20:37.8856600 [29] trace: 17-0002 client[KerberosExampleClient] ack sent: {"c":"ack","cid":"0","user_id":"60east","s":0,"bm":"15396145143580885467|0|","client_name":"KerberosExampleClient","a":"processed","status":"success","pw":"YIGZBgkqhkiG9xIBAgICAG+BiTCBhqADAgEFoQMCAQ+iejB4oAMCARKicQRvupwwcjivlf4T1wY+bfhi4i3qBrTnZ5toPbXyR7O3syB8WyFCA5iepf3bJ/44HRo0PKQQVSmpH1hhWtdx+T9HUWmLOiRG+lu6TCsF7RBr6pjsW+V8/iHzOTaD5/T5gxHlQIFl/nSWH8pyS/1B8EqL","version":"develop.310283.6f81d4a"}
```

AMPS Client Support

60East provides an implementation of an authenticator for each of the client libraries. These authenticators use the features of that individual programming language to provide the appropriate Kerberos token to AMPS.

The details of how to use each authenticator depend on the language, as described below.

Authenticating using Python

For Kerberos authentication using Python there is a single module, amps_kerberos_authenticator, for authentication on both Linux and Windows.

The Python Kerberos authenticator code can be found in the amps-authentication-python GitHub repo.

The Python Kerberos authenticator has two dependencies: the kerberos module for authentication on Linux and the winkerberos module for authentication on Windows.

In order to execute the below code the following needs to be done:

- The `USERNAME` and `HOSTNAME` variables need to be set to the user name you are authenticating as and the fully qualified name of the host AMPS is running on.
- Kerberos credentials need to be available for the user you are authenticating as, thus `kinit` needs to be executed before the code is run or the `KRB5_CLIENT_KTNAME` environment variable needs to be set to a keytab that has been generated for the user (note that this is for the user logging in, and should be a different keytab than the one AMPS is configured with).
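A quick pre-flight check for the second requirement might look like the sketch below. It assumes a Linux host with the MIT Kerberos `klist` tool on the path, where `klist -s` exits with status 0 only when a readable, unexpired credentials cache exists. The function names are hypothetical and are not part of the AMPS authenticator module.

```python
import os
import subprocess


def has_ticket_cache():
    """True if `klist -s` finds a readable, unexpired credentials cache."""
    try:
        return subprocess.call(["klist", "-s"]) == 0
    except OSError:
        # klist is not installed or is not on the PATH.
        return False


def credential_source(env=None, ticket_check=has_ticket_cache):
    """Report where Kerberos credentials would come from, or None.

    ticket_check is injectable so the logic can be exercised without a
    Kerberos installation.
    """
    env = os.environ if env is None else env
    if ticket_check():
        return "ticket-cache"
    if env.get("KRB5_CLIENT_KTNAME"):
        return "keytab"
    return None
```

If `credential_source()` returns `None`, neither `kinit` nor a client keytab has been set up, and the logon in the example that follows can be expected to fail.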

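```python
import AMPS
import amps_kerberos_authenticator

USERNAME = 'username'
HOSTNAME = 'hostname'

# The SPN of the AMPS transport and the URI used to connect to it
AMPS_SPN = 'AMPS/%s' % HOSTNAME
AMPS_URI = 'tcp://%s@%s:8095/amps/json' % (USERNAME, HOSTNAME)


def main():
    # Create an authenticator for the AMPS SPN and pass it to logon
    authenticator = amps_kerberos_authenticator.create(AMPS_SPN)
    client = AMPS.Client('KerberosExampleClient')
    client.connect(AMPS_URI)
    client.logon(5000, authenticator)


if __name__ == '__main__':
    main()
```

Authenticating using Java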

For Kerberos authentication using Java there are two different Authenticator implementations, one for GSSAPI-based authentication and one for SSPI-based authentication. GSSAPI is the only option for authentication when running on Linux, but it is supported on Windows as well. When using GSSAPI, a JAAS configuration file is required. SSPI, on the other hand, uses Windows native system calls and thus is Windows only and does not require a JAAS configuration. In general, we recommend that AMPSKerberosGSSAPIAuthenticator is used when running on Linux and AMPSKerberosSSPIAuthenticator is used when running on Windows.

The Java Kerberos authenticator code can be found in the amps-authentication-java GitHub repo.

The Java Kerberos authenticator has a number of dependencies that are detailed in the Maven POM file for the AMPSKerberos project.

Below are two different JAAS configuration options. The first example will use Kerberos credentials in the user’s Kerberos credentials cache or will prompt the user for a password to obtain the credentials. The second example utilizes a keytab to obtain the Kerberos credentials. When a JAAS configuration is utilized the java.security.auth.login.config property needs to be set to the path to the config file and the config file entry name needs to be passed to the AMPSKerberosGSSAPIAuthenticator along with the SPN.

```
TestClient {
    com.sun.security.auth.module.Krb5LoginModule required
    isInitiator=true
    principal="username"
    useTicketCache=true
    storeKey=true;
};
```

```
TestClient {
    com.sun.security.auth.module.Krb5LoginModule required
    isInitiator=true
    useKeyTab=true
    keyTab="/path/to/username.keytab"
    principal="username@REALM"
    storeKey=true
    doNotPrompt=true;
};
```

In order to execute the below code the following needs to be done:

- If using GSSAPI, create the appropriate JAAS config, uncomment the `AMPSKerberosGSSAPIAuthenticator` line and specify `-Djava.security.auth.login.config=/path/to/jaas.conf` when starting the app.
- If running on Windows and using SSPI, uncomment the `AMPSKerberosSSPIAuthenticator` line.
- The `username` and `hostname` variables need to be set to the user name you are authenticating as and the fully qualified name of the host AMPS is running on.

```java
import com.crankuptheamps.authentication.kerberos.AMPSKerberosGSSAPIAuthenticator;
import com.crankuptheamps.authentication.kerberos.AMPSKerberosSSPIAuthenticator;
import com.crankuptheamps.client.Authenticator;
import com.crankuptheamps.client.Client;
import com.crankuptheamps.client.exception.ConnectionException;

public class KerberosAuthExample {
    public static void main(String[] args) throws ConnectionException {
        String username = "username";
        String hostname = "hostname";

        String amps_spn = "AMPS/" + hostname;
        String amps_uri = "tcp://" + username + "@" + hostname + ":8095/amps/json";

        // Uncomment exactly one of the following two lines
        // Authenticator authenticator = new AMPSKerberosGSSAPIAuthenticator(amps_spn, "TestClient");
        // Authenticator authenticator = new AMPSKerberosSSPIAuthenticator(amps_spn);

        Client client = new Client("KerberosExampleClient");
        try {
            client.connect(amps_uri);
            client.logon(5000, authenticator);
        } finally {
            client.close();
        }
    }
}
```

Authenticating using C++

For Kerberos authentication using C++ there are two different Authenticator implementations, one for GSSAPI-based authentication and one for SSPI-based authentication. GSSAPI is the only option for authentication when running on Linux, but, unlike Java, it is not supported on Windows. Specifically, AMPSKerberosGSSAPIAuthenticator is used when running on Linux and AMPSKerberosSSPIAuthenticator is used when running on Windows.

The C++ Kerberos authenticator code can be found in the amps-authentication-cpp GitHub repo.

The C++ Kerberos authenticator for Linux (GSSAPI) has a dependency on the GSSAPI libs that are part of the krb5 distribution.

In order to execute the below code the following needs to be done:

- The `username` and `hostname` variables need to be set to the user name you are authenticating as and the fully qualified name of the host AMPS is running on.

```cpp
#include <ampsplusplus.hpp>
#include <string>

#ifdef _WIN32
#include "AMPSKerberosSSPIAuthenticator.hpp"
#else
#include "AMPSKerberosGSSAPIAuthenticator.hpp"
#endif

int main()
{
  std::string username("username");
  std::string hostname("hostname");

  std::string amps_spn = std::string("AMPS/") + hostname;
  std::string amps_uri = std::string("tcp://") + username + "@" + hostname + ":8095/amps/json";

  // Pick the authenticator that matches the platform
#ifdef _WIN32
  AMPS::AMPSKerberosSSPIAuthenticator authenticator(amps_spn);
#else
  AMPS::AMPSKerberosGSSAPIAuthenticator authenticator(amps_spn);
#endif

  AMPS::Client client("KerberosExampleClient");
  client.connect(amps_uri);
  client.logon(5000, authenticator);
}
```

Authenticating using C#/.NET

For Kerberos authentication using C# there is a single module, AMPSKerberosAuthenticator, for authentication on Windows.

The C# Kerberos authenticator code can be found in the amps-authentication-csharp GitHub repo.

The C# Kerberos authenticator has a single dependency on the NSspi NuGet package.

In order to execute the below code the following needs to be done:

- The `username` and `hostname` variables need to be set to the user name you are authenticating as and the fully qualified name of the host AMPS is running on.

```csharp
using AMPS.Client;
using AMPSKerberos;

class KerberosAuthExample
{
    static void Main(string[] args)
    {
        string username = "username";
        string hostname = "hostname";

        string amps_spn = string.Format("AMPS/{0}", hostname);
        string amps_uri = string.Format("tcp://{0}@{1}:8095/amps/json", username, hostname);

        AMPSKerberosAuthenticator authenticator = new AMPSKerberosAuthenticator(amps_spn);

        using (Client client = new Client("KerberosExampleClient"))
        {
            client.connect(amps_uri);
            client.logon(5000, authenticator);
        }
    }
}
```

Server Chooser

When using a server chooser, the chooser's authenticator function (get_current_authenticator in the Python client) needs to be implemented, and it must return the appropriate authenticator for the server you are connecting to. If the server chooser is configured to return URIs for different hosts, then the authenticator returned for each URI must be configured with the SPN for that host.
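The part of this that depends on the host can be reduced to a small mapping from connection URI to SPN. The sketch below shows only that mapping, using the standard library URL parser; `spn_for_uri` is a name chosen for this illustration and is not part of the AMPS client.

```python
try:
    from urllib.parse import urlparse  # Python 3
except ImportError:
    from urlparse import urlparse      # Python 2


def spn_for_uri(uri, service="AMPS"):
    """Derive the SPN for the host named in an AMPS connection URI."""
    hostname = urlparse(uri).hostname
    if not hostname:
        raise ValueError("no host in URI: %r" % uri)
    return "%s/%s" % (service, hostname)
```

Because the SPN is derived from whichever URI is current, a chooser that caches one authenticator per host name stays correct when it fails over between hosts.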

Below is an example of a server chooser implementation in Python that uses multiple hosts.

```python
import AMPS
import amps_kerberos_authenticator

try:
    from urllib.parse import urlparse  # Python 3
except ImportError:
    from urlparse import urlparse      # Python 2

USERNAME = 'username'
HOSTNAME1 = 'hostname1'
HOSTNAME2 = 'hostname2'


class KerberosAuthServerChooser(AMPS.DefaultServerChooser):
    def __init__(self):
        super(KerberosAuthServerChooser, self).__init__()
        # One authenticator per host, created on first use
        self.authenticators = {}

    def get_current_authenticator(self):
        hostname = urlparse(self.get_current_uri()).hostname
        if hostname in self.authenticators:
            return self.authenticators[hostname]

        # Each host has its own SPN, so each needs its own authenticator
        spn = 'AMPS/%s' % hostname
        authenticator = amps_kerberos_authenticator.create(spn)
        self.authenticators[hostname] = authenticator
        return authenticator

    def report_failure(self, exception, connectionInfo):
        print(exception)


def main():
    client = AMPS.HAClient('KerberosExampleClient')
    chooser = KerberosAuthServerChooser()
    chooser.add('tcp://%s@%s:8095/amps/json' % (USERNAME, HOSTNAME1))
    chooser.add('tcp://%s@%s:8095/amps/json' % (USERNAME, HOSTNAME2))
    client.set_server_chooser(chooser)
    client.connect_and_logon()


if __name__ == '__main__':
    main()
```

Admin Interface Authentication

With an OS and browser that is properly configured for Kerberos authentication, it is possible to authenticate against the AMPS Admin Interface using Kerberos via a browser.

The curl command can also be used to authenticate against the admin interface when the OS is properly configured and curl has been built with Kerberos support.

`curl -v --negotiate -u : http://hostname:admin_port/amps/instance/config.xml`

It is also possible to programmatically authenticate with the admin interface. Below is an example using Python.

```python
#!/usr/bin/env python
import amps_kerberos_authenticator
import requests

HOSTNAME = 'hostname'
ADMIN_PORT = 0  # Set this to the port of the AMPS Admin interface

HTTP_SPN = 'HTTP/%s' % HOSTNAME
ADMIN_URL = 'http://%s:%s/amps/instance/config.xml' % (HOSTNAME, ADMIN_PORT)


def main():
    authenticator = amps_kerberos_authenticator.create(HTTP_SPN)
    token = authenticator.authenticate(None, None)  # No user and no return token
    # Send the token in an HTTP Negotiate authorization header
    r = requests.get(ADMIN_URL, headers={'Authorization': 'Negotiate %s' % token})
    print(r.text)


if __name__ == '__main__':
    main()
```

Replication Authentication

Securing AMPS for replication requires a replication Transport with Authentication enabled, as well as the use of an Authenticator in the Replication/Destination Transport. The libamps_multi_authenticator module provides the client-side authentication support for the replication connection.

The below sample AMPS configuration uses environment variables for the Kerberos configuration elements. The following variables would need to be set correctly for this config to function as expected. A second instance would also need to be configured.

- `AMPS_A_SPN` - Set to `AMPS/hostname` where `hostname` is the fully qualified name of the host AMPS A is running on.
- `AMPS_B_SPN` - Set to `AMPS/hostname` where `hostname` is the fully qualified name of the host AMPS B is running on.
- `AMPS_A_KEYTAB` - Set to the path of a Kerberos keytab containing entries for the `AMPS` SPN for the A instance.
- `AMPS_USER_KEYTAB` - Set to the path of a Kerberos keytab that contains an entry for the user principal that you want to use to authenticate to AMPS B.

```xml
<AMPSConfig>
  <Name>AMPS_A</Name>

  <Authentication>
    <Module>libamps-multi-authentication</Module>
    <Options>
      <Kerberos.SPN>${AMPS_A_SPN}</Kerberos.SPN>
      <Kerberos.Keytab>${AMPS_A_KEYTAB}</Kerberos.Keytab>
    </Options>
  </Authentication>

  <Transports>
    <!-- Authentication enabled via the top-level Authentication configuration -->
    <Transport>
      <Name>json-tcp</Name>
      <InetAddr>8095</InetAddr>
      <Type>tcp</Type>
      <Protocol>amps</Protocol>
      <MessageType>json</MessageType>
    </Transport>

    <!-- Authentication enabled via the top-level Authentication configuration -->
    <Transport>
      <InetAddr>8105</InetAddr>
      <Name>amps-replication</Name>
      <Type>amps-replication</Type>
    </Transport>
  </Transports>

  <Replication>
    <Destination>
      <Name>AMPS_B</Name>
      <SyncType>sync</SyncType>
      <Transport>
        <InetAddr>localhost:9999</InetAddr>
        <Type>amps-replication</Type>
        <Authenticator>
          <Module>libamps-multi-authenticator</Module>
          <Options>
            <Kerberos.Keytab>${AMPS_USER_KEYTAB}</Kerberos.Keytab>
            <Kerberos.SPN>${AMPS_B_SPN}</Kerberos.SPN>
          </Options>
        </Authenticator>
      </Transport>
      <Topic>
        <MessageType>json</MessageType>
        <Name>.*</Name>
      </Topic>
    </Destination>
  </Replication>

  <Modules>
    <Module>
      <Name>libamps-multi-authentication</Name>
      <Library>libamps_multi_authentication.so</Library>
    </Module>
    <Module>
      <Name>libamps-multi-authenticator</Name>
      <Library>libamps_multi_authenticator.so</Library>
    </Module>
  </Modules>

  ...

</AMPSConfig>
```

To Guard and Protect

In this post, I’ve covered the basics of setting up AMPS for Kerberos authentication. If your site uses Kerberos, the team that manages that installation will have definitive answers on how Kerberos is configured in your environment.

In this post, we’ve intentionally kept the focus on AMPS. We haven’t delved into the depths of troubleshooting Kerberos installations, or the considerations involved in setting up a Kerberos infrastructure. We encourage you to work with the team responsible for your company’s Kerberos installation when configuring AMPS, since many of the most common problems in setting up Kerberos authentication are a matter of ensuring that the credentials provided match what the Kerberos server expects – which is most easily done when the owners of the Kerberos configuration are also involved.

We’re very proud to have Kerberos support available out of the box with AMPS. Let us know how the Kerberos support works for you!

Over the last several days, the technology world has been focused on the impact of the Meltdown and Spectre vulnerabilities. There are several good articles published about these vulnerabilities, among them

Over the last several days, the technology world has been focused on the impact of the Meltdown and Spectre vulnerabilities. There are several good articles published about these vulnerabilities, among them  One of the most common requirements for AMPS instances is integration with an enterprise security system. In this blog post, we’ll show you the easiest way to get an integration up and running – by building an authentication and entitlement system from scratch!

One of the most common requirements for AMPS instances is integration with an enterprise security system. In this blog post, we’ll show you the easiest way to get an integration up and running – by building an authentication and entitlement system from scratch! This month marks the 10 year anniversary of AMPS being deployed into production environments, helping to fuel the global financial markets. Those first customer deployments built on AMPS are still in production, and are still critical infrastructure today!

This month marks the 10 year anniversary of AMPS being deployed into production environments, helping to fuel the global financial markets. Those first customer deployments built on AMPS are still in production, and are still critical infrastructure today! One of the most popular features of AMPS is the State-of-the-World (or SOW), which allows applications to quickly retrieve the most current version of a message. Many applications use the SOW as a high-performance streaming database, quickly retrieving the current results of the query and then automatically receiving updates as changes occur to the data.

One of the most popular features of AMPS is the State-of-the-World (or SOW), which allows applications to quickly retrieve the most current version of a message. Many applications use the SOW as a high-performance streaming database, quickly retrieving the current results of the query and then automatically receiving updates as changes occur to the data. From the beginning, AMPS has been content aware. Most AMPS applications use content filtering, and features like the State-of-the-World, delta messaging, aggregation, and message enrichment all depend on AMPS being able to parse and filter messages.

From the beginning, AMPS has been content aware. Most AMPS applications use content filtering, and features like the State-of-the-World, delta messaging, aggregation, and message enrichment all depend on AMPS being able to parse and filter messages.

60East is proud to announce the release of AMPS 5.3 — the most

fully-featured and easy to use version of AMPS yet!

60East is proud to announce the release of AMPS 5.3 — the most

fully-featured and easy to use version of AMPS yet! Select Lists is a new feature introduced in our 5.3 release of the AMPS server.

This feature lets you declare a subset of fields for your application to

receive when querying or subscribing to a topic. AMPS client applications no

longer need to receive a full message when the application will only use

part of the message. This feature, when combined with existing filter

functionality, provides developers with new methods to efficiently manage

the volume of data flowing back to their applications from the AMPS server.

Select Lists is a new feature introduced in our 5.3 release of the AMPS server.

This feature lets you declare a subset of fields for your application to

receive when querying or subscribing to a topic. AMPS client applications no

longer need to receive a full message when the application will only use

part of the message. This feature, when combined with existing filter

functionality, provides developers with new methods to efficiently manage

the volume of data flowing back to their applications from the AMPS server.

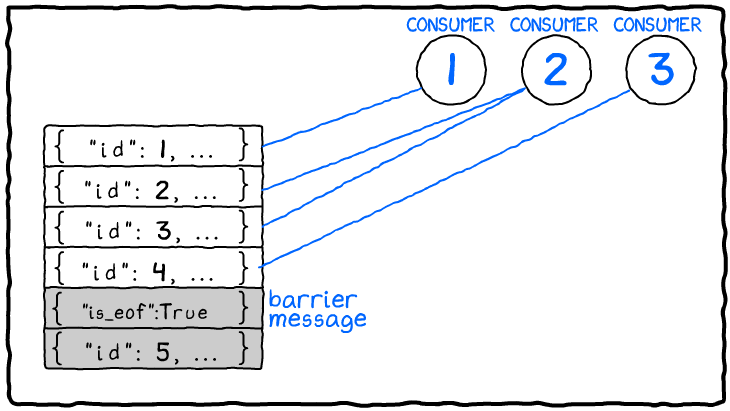

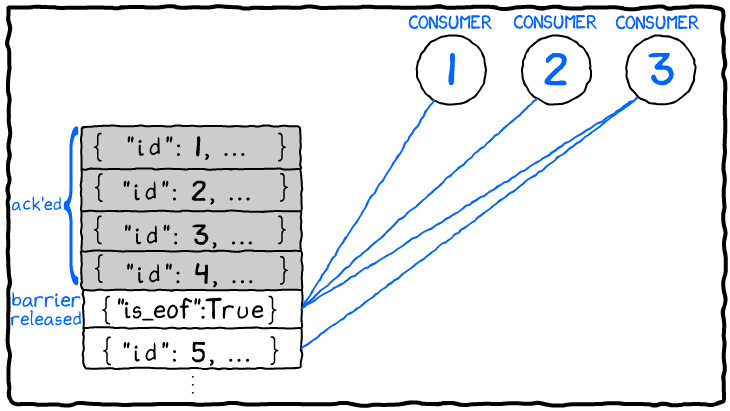

Scaling out your data processing using AMPS queues allows you to dynamically adjust how many workers you apply to your data based on your needs and your computing resources. Larger orders coming in or more events to process? Just spin up more subscribers to your AMPS queue and let them take their share of the load. AMPS dynamically adjusts how many messages it gives to each consumer based on their available backlog and message processing rate, and your work gets done without needing costly reconfiguration or re-partitioning.

Scaling out your data processing using AMPS queues allows you to dynamically adjust how many workers you apply to your data based on your needs and your computing resources. Larger orders coming in or more events to process? Just spin up more subscribers to your AMPS queue and let them take their share of the load. AMPS dynamically adjusts how many messages it gives to each consumer based on their available backlog and message processing rate, and your work gets done without needing costly reconfiguration or re-partitioning.

ag-Grid 22.1.1

ag-Grid 22.1.1 Sencha Ext JS 7.0.0

Sencha Ext JS 7.0.0 Kendo UI Grid 2019.3.1023

Kendo UI Grid 2019.3.1023 w2ui 1.5

w2ui 1.5 FancyGrid 1.7.87

FancyGrid 1.7.87 Webix DataTable 4.3.0

Webix DataTable 4.3.0

Even if you can’t make it to the great outdoors, AMPS now makes it easy to visit a range of data in the transaction log.

Even if you can’t make it to the great outdoors, AMPS now makes it easy to visit a range of data in the transaction log.

Tabulator 4.9

Tabulator 4.9 FXB Grid

FXB Grid Wijmo Grid 5.20203.766

Wijmo Grid 5.20203.766 RevoGrid 2.9.0

RevoGrid 2.9.0 Smart Grid 0.3.1

Smart Grid 0.3.1 SyncFusion DataGrid 18.4.39

SyncFusion DataGrid 18.4.39

AMPS is used for a wide variety of applications, from extreme low-latency applications with a latency budget of less than a millisecond roundtrip to applications that aggregate millions of fast changing records that intentionally conflate updates to reduce load on a user interface. All of these applications have one thing in common, though: AMPS runs on x64 Linux, so application developers need to have access to a Linux installation to develop against AMPS.

AMPS is used for a wide variety of applications, from extreme low-latency applications with a latency budget of less than a millisecond roundtrip to applications that aggregate millions of fast changing records that intentionally conflate updates to reduce load on a user interface. All of these applications have one thing in common, though: AMPS runs on x64 Linux, so application developers need to have access to a Linux installation to develop against AMPS. Over the last several days, a remote code execution vulnerability (

Over the last several days, a remote code execution vulnerability ( From Day-1, we’ve built AMPS to be content aware, yet message-type agnostic. As such, we’re often asked which message-type we think is best. The best message type, in most situations, is dependent on the use-case. In this article, we drill-down into what factors you should consider when selecting a message type, the benefits/drawbacks of each message-type and the functionality trade-offs specifically when it comes to AMPS.

From Day-1, we’ve built AMPS to be content aware, yet message-type agnostic. As such, we’re often asked which message-type we think is best. The best message type, in most situations, is dependent on the use-case. In this article, we drill-down into what factors you should consider when selecting a message type, the benefits/drawbacks of each message-type and the functionality trade-offs specifically when it comes to AMPS.